In this blog post, we will delve into Google's PaLM API, an exciting addition announced at Google I/O 2023. We'll explore its key functionalities, how to obtain access, and how to leverage its capabilities. Please note that this tutorial aims to provide a brief introduction to the PaLM API, particularly for developers, data scientists, ML engineers, and anyone interested in exploring its potential. So, let's dive into the world of PaLM 2 Models!

If you are a visual learner, check out this video tutorial where I demonstrate how to access the PaLM API.

Introduction

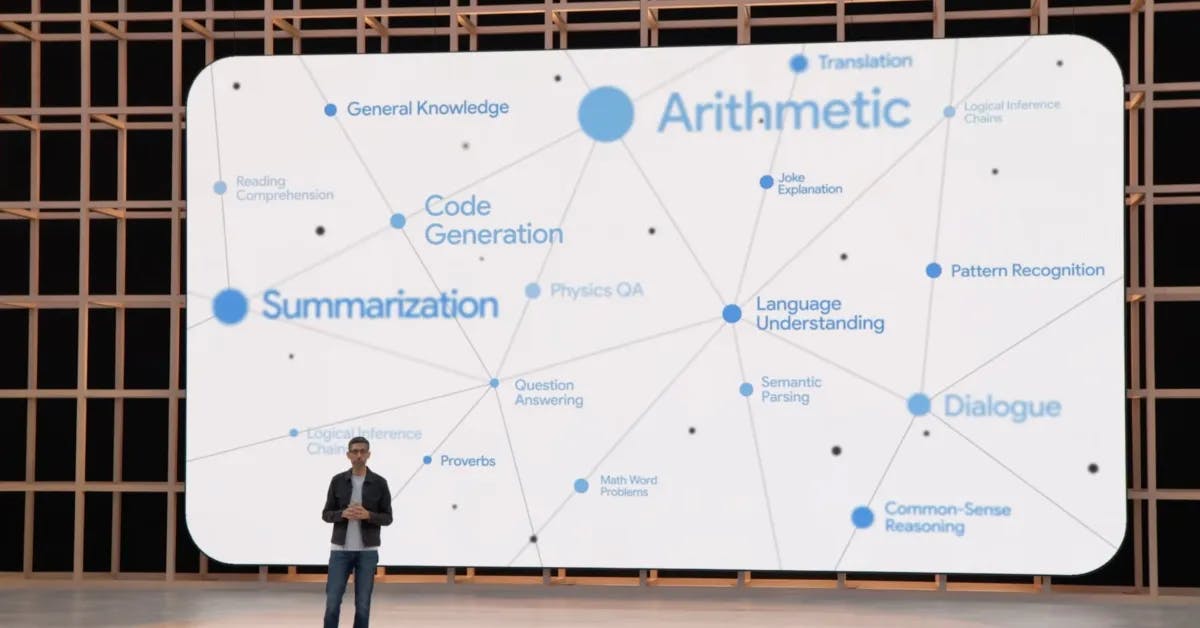

PaLM 2 is the family of large language models (LLMs) from Google AI that succeeds the original PaLM. Google has not officially published parameter counts for PaLM 2; the 540-billion-parameter figure that is often quoted belongs to the first-generation PaLM model. PaLM 2 is trained on an extensive dataset encompassing text and code, and it can generate text, translate between languages, write various forms of creative content, and answer questions informatively. These abilities can improve applications such as machine translation, text generation, and code completion tools.

Let's highlight some of the capabilities of PaLM 2:

Generate Text: PaLM 2, through the PaLM API, can generate text in diverse styles, including news articles, fiction, poetry, and code snippets.

Language Translation: PaLM 2 can translate text between languages.

Creative Content Creation: PaLM 2 can produce diverse forms of creative content, such as poems, scripts, musical compositions, emails, letters, and more.

Informative Question-Answering: PaLM 2 can provide informative answers to open-ended, challenging, or unusual questions.

PaLM API:

The PaLM API is a publicly accessible interface that empowers developers to leverage the capabilities of PaLM 2. This powerful tool grants access to the creative potential of PaLM2 models.

While both PaLM API and PaLM 2 are still in development, you can explore and test them through the trusted tester program.

Features:

Text Completion: PaLM 2 can generate text that continues from a given prompt. For instance, if prompted to "Write a poem about a flower," PaLM 2 can generate a poem about a flower. It can also complete unfinished sentences or paragraphs.

Chat: PaLM 2 can hold multi-turn conversations, understanding and responding to a wide array of prompts and questions. This makes it a building block for assistants that help with requests such as giving directions, handling a reservation, or working through a task.

Embeddings: An embedding converts text into a numerical vector that captures its meaning. Developers can use the embeddings produced by PaLM 2 to build applications on their own data or on external sources. Because the embeddings are plain lists of numbers, they work with popular open-source libraries like PyTorch, TensorFlow, Keras, and JAX, which makes them easy to integrate into downstream applications.

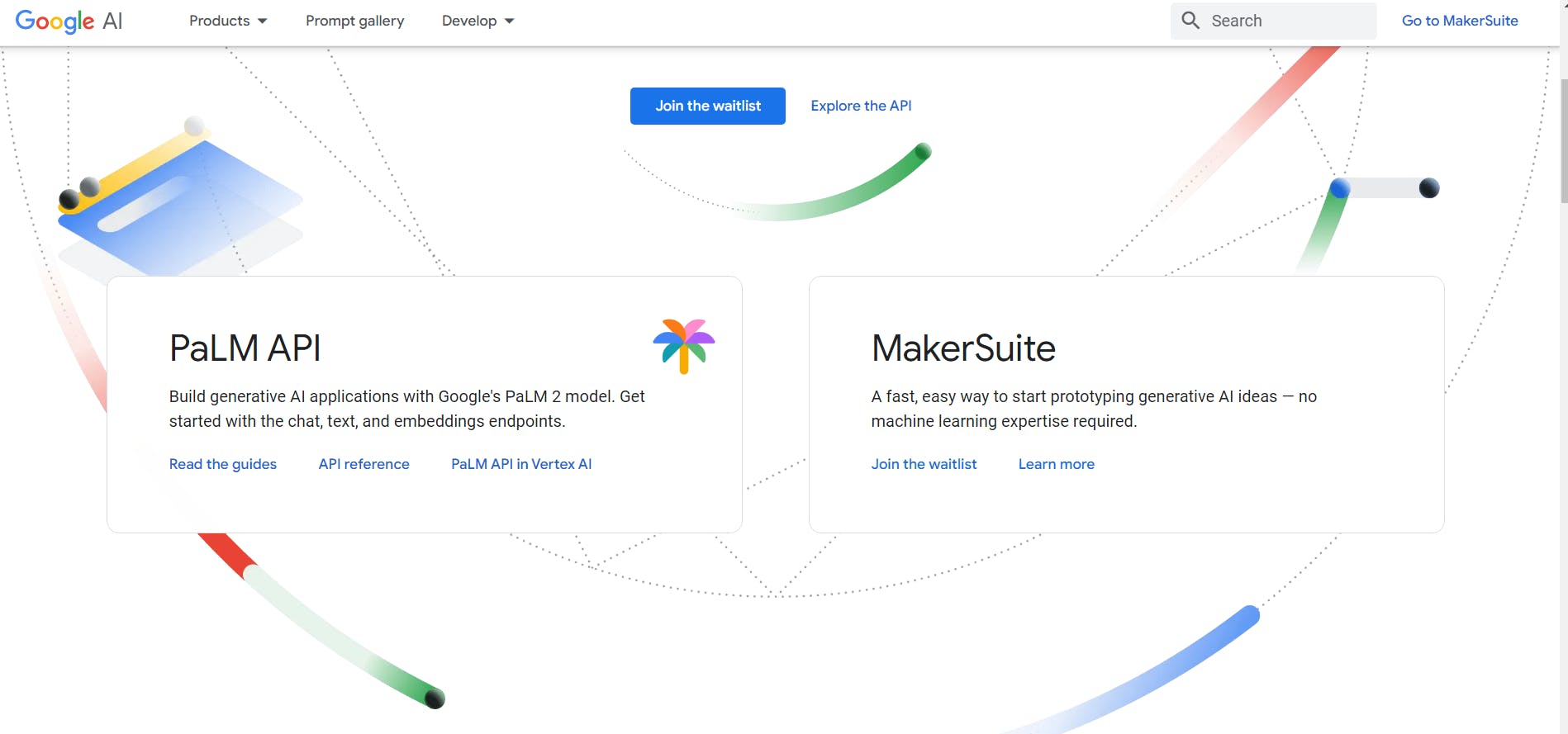

Accessing PaLM API:

To access the PaLM API, you can join the waiting list for the private preview through MakerSuite, a tool facilitating quick and easy prototyping for developers. Visit the following link to join the waiting list: [developers.generativeai.google]

Once your request is approved, you'll receive an invitation to test the PaLM API. Upon gaining access, you'll need to obtain an API key.

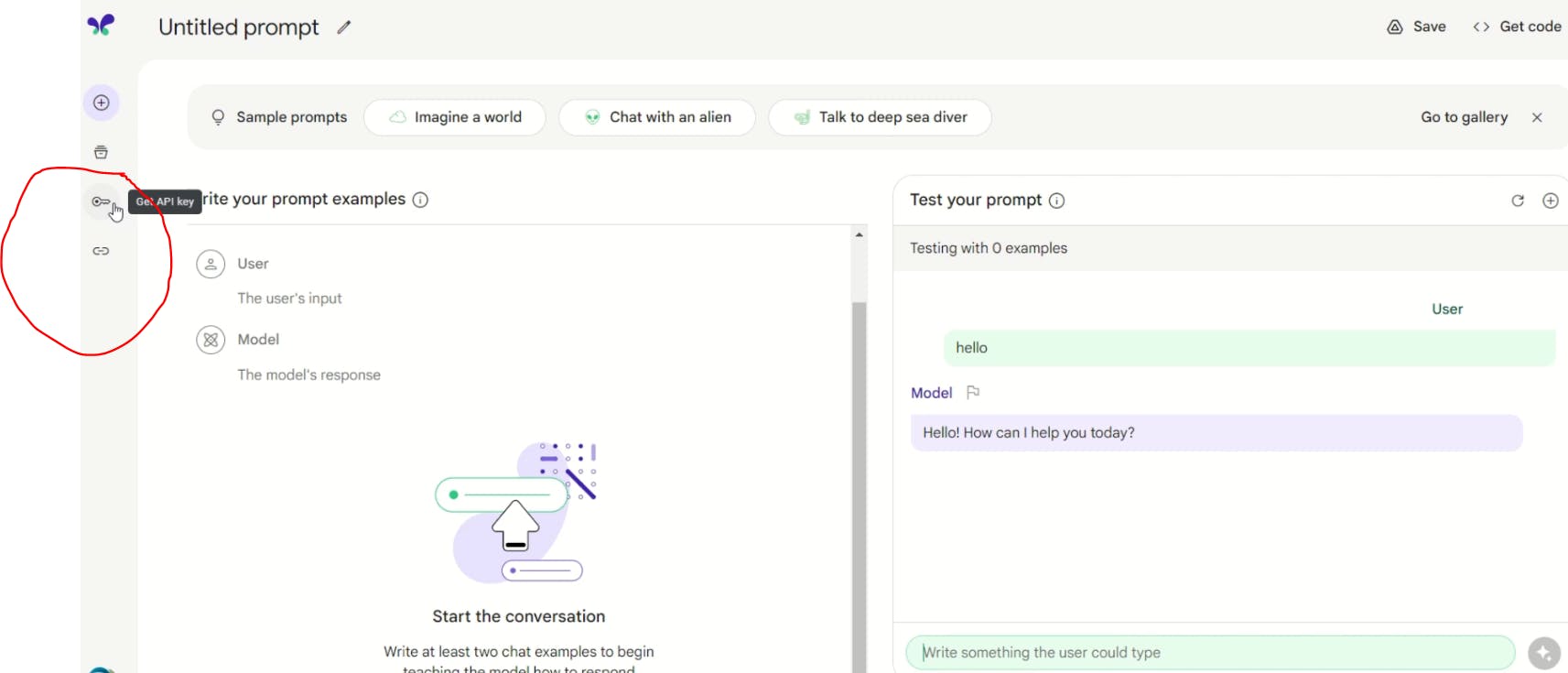

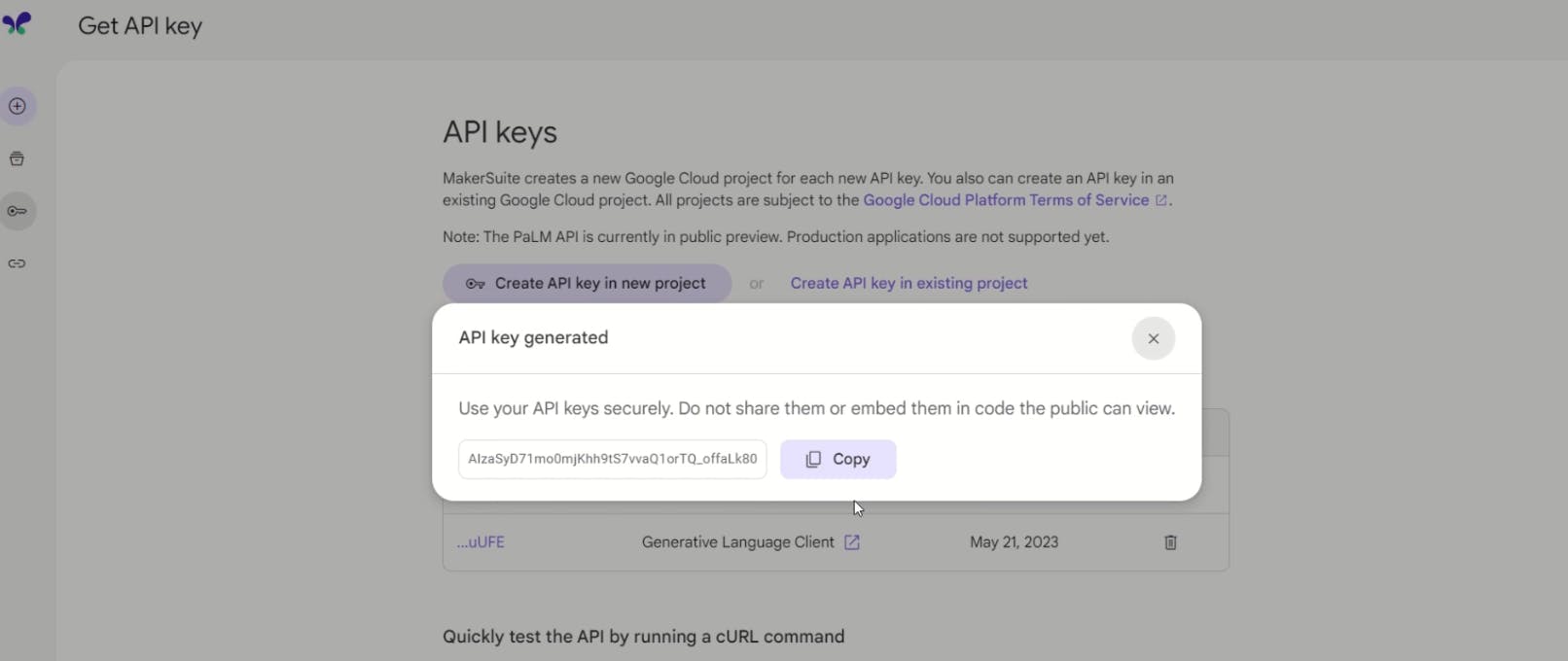

To begin, access the MakerSuite and click on the key icon located in the middle left section.

You'll be redirected to a new screen where you can create and copy your API key.

Installing packages:

!pip install -q google-generativeai

!pip install numpy

These two lines install google-generativeai and numpy with pip, Python's package installer; both packages are used below. The leading ! runs the command from a notebook cell (for example in Colab or Jupyter); drop it when installing from a terminal.

Importing packages:

import pprint

import google.generativeai as palm

import numpy as np

These three lines import the packages we need. The main one is google.generativeai, imported as palm, which gives us access to the models. numpy is used later to compute dot products, and pprint, a module from Python's standard library, is optional and prints output in an easy-to-read format.

Configuring the API Key

Every request to the PaLM API must be authenticated with an API key, so we configure the client with the key created in MakerSuite:

palm.configure(api_key='YOUR API KEY HERE')

By executing this line, we establish the API key that will be used to authenticate our API requests to the PaLM 2 language models.
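Pasting the key directly into a notebook makes it easy to leak when the file is shared. Here is a minimal sketch of one alternative, assuming the key is stored in an environment variable. The variable name `PALM_API_KEY` and the helper `load_api_key` are hypothetical choices for illustration, not part of the PaLM API.

```python
import os


def load_api_key(env=os.environ, var_name="PALM_API_KEY"):
    """Return the API key stored in `var_name`, or raise if it is missing.

    Both the variable name and this helper are illustrative assumptions.
    """
    key = env.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {var_name} environment variable first.")
    return key


# Hypothetical usage, once the variable is set in your shell or notebook:
# palm.configure(api_key=load_api_key())
```

Passing the environment as a parameter keeps the helper easy to test with a plain dictionary.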

Now, let's make our first request!

Exploring Available Models

To start off, let's examine the models currently available and the tasks they are designed for. It's important to note that both the API and the models are still under development, with new models continuously being added.

for m in palm.list_models():

    print(m.name, '---------------------->', m.supported_generation_methods)

The code snippet above allows us to retrieve and display the list of currently available models and the corresponding supported generation methods. Here's an example output:

models/chat-bison-001 ----------------------> ['generateMessage']

models/text-bison-001 ----------------------> ['generateText']

models/embedding-gecko-001 ----------------------> ['embedText']

From the output, we can see that models/chat-bison-001 is designed for chat, models/text-bison-001 for text completion, and models/embedding-gecko-001 for generating text embeddings.
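As more models are added, it can help to pick one by capability rather than by hardcoded name. The sketch below filters on the supported generation methods. The helper `models_supporting` is hypothetical, and the stand-in objects simply mirror the example output above so the snippet runs without API access.

```python
from types import SimpleNamespace


def models_supporting(models, method):
    """Return the names of models whose supported methods include `method`."""
    return [m.name for m in models if method in m.supported_generation_methods]


# Stand-in objects that mirror the example output above.
# With API access, pass palm.list_models() instead.
sample_models = [
    SimpleNamespace(name="models/chat-bison-001",
                    supported_generation_methods=["generateMessage"]),
    SimpleNamespace(name="models/text-bison-001",
                    supported_generation_methods=["generateText"]),
    SimpleNamespace(name="models/embedding-gecko-001",
                    supported_generation_methods=["embedText"]),
]

text_models = models_supporting(sample_models, "generateText")
print(text_models)  # prints ['models/text-bison-001']
```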

Text Completion

Now, let's explore the text completion capabilities of the models/text-bison-001 model. For example, we'll complete the sentence prompt: "An apple a day..."

prompt = """

Complete this sentence, an apple a day...

"""

model = "models/text-bison-001"

max_output_tokens = 100

temperature = 1.0

completion = palm.generate_text(

    model=model,

    prompt=prompt,

    temperature=temperature,

    max_output_tokens=max_output_tokens,

    candidate_count=2,

)

print(completion.result)

In the above code snippet, we call the generate_text function to perform text completion with the specified model. Parameters such as the model, prompt, temperature, max output tokens, and candidate count let us control the generated text.

For instance, the output may look like this:

Output: An apple a day keeps the doctor away.

Feel free to experiment with different parameter values to achieve the desired results.

Let's dissect the provided parameters:

model = "models/text-bison-001" sets the model to be used, here models/text-bison-001 for text completion.

max_output_tokens = 100 sets the maximum length of the generated text, limiting it to 100 tokens.

temperature = 1.0 controls the randomness of the model's output. It takes a value between 0 and 1, where 0 gives the most deterministic output and 1 introduces high randomness.

candidate_count = 2 asks the API to return up to two alternative completions for the same prompt.
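To build intuition for temperature, here is a sketch of how it is commonly applied when sampling from a language model: the raw scores are divided by the temperature before being turned into probabilities. Whether PaLM 2 does exactly this internally is an assumption, and the scores and function name below are hypothetical.

```python
import numpy as np


def sampling_probabilities(logits, temperature):
    """Turn raw scores into sampling probabilities at a given temperature."""
    logits = np.asarray(logits, dtype=float)
    if temperature == 0:
        # Treat temperature 0 as greedy decoding: all mass on the top score.
        probs = np.zeros_like(logits)
        probs[np.argmax(logits)] = 1.0
        return probs
    scaled = logits / temperature
    scaled -= scaled.max()  # subtract the max for numerical stability
    exp = np.exp(scaled)
    return exp / exp.sum()


toy_logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
for t in (0, 0.5, 1.0):
    print(t, np.round(sampling_probabilities(toy_logits, t), 3))
```

Lower temperatures concentrate probability on the highest-scoring token, which is why the output becomes more repeatable as the value approaches 0.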

The output of the model can be accessed through the completion.result property.

To explore multiple candidate predictions, we can access them individually:

print('Candidate 1:', completion.candidates[0]['output'])

print('Candidate 2:', completion.candidates[1]['output'])
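Indexing candidates[0] and candidates[1] directly raises an IndexError if fewer candidates come back than were requested. A small sketch that loops over whatever is present instead; the helper name is hypothetical, and the sample data is placeholder text shaped like the candidate dictionaries used above, not real model output.

```python
def candidate_outputs(candidates):
    """Return the 'output' text of every candidate, skipping empty ones."""
    return [c["output"] for c in candidates if c.get("output")]


# Placeholder data shaped like completion.candidates.
# With API access, pass completion.candidates instead.
sample_candidates = [
    {"output": "An apple a day keeps the doctor away."},
    {"output": "An apple a day is a healthy habit."},
]

for i, text in enumerate(candidate_outputs(sample_candidates), start=1):
    print(f"Candidate {i}: {text}")
```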

Chat-based Interactions

In addition to text completion, the PaLM API also enables chat-based interactions. Let's take a look at how to initiate and continue a conversation using the API.

# Create a new conversation

response = palm.chat(prompt='Hello')

# The model's response can be accessed via the "last" property of the response object

pprint.pprint(response.last)

The code snippet above demonstrates how to initiate a conversation by sending the initial prompt, in this case, "Hello." The response object contains a property called "last" that provides the model's prediction or response.

To continue an existing chat, we can use the reply method and supply a reply as the input.

# Add to the existing conversation by sending a reply

response = response.reply("Who is the president of the USA?")

# Obtain the model's latest response from the `last` field

pprint.pprint(response.last)

The output may look like this:

Output: The current president of the United States is Joe Biden. He was elected in 2020 and took office on January 20, 2021. He is the 46th president of the United States.

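Note that continuing with the reply method is what preserves context: the reply is sent together with the earlier messages of the conversation, so the model can take them into account. Starting over with a fresh palm.chat call begins a new conversation with no history.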
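The idea behind reply can be sketched without the API at all: each turn appends to a message list, and the whole list is what the model sees. The message layout (author and content keys), the `send` helper, and the stub model below are assumptions made for illustration, not the library's internals.

```python
def send(history, user_text, model_fn):
    """Append the user's turn, call the model with the full history,
    then append the model's reply. Returns a new history list."""
    history = history + [{"author": "user", "content": user_text}]
    reply = model_fn(history)
    return history + [{"author": "model", "content": reply}]


def stub_model(history):
    """Stand-in for the real model: reports how many messages it was shown."""
    return f"I can see {len(history)} message(s) so far."


history = send([], "Hello", stub_model)
history = send(history, "Who is the president of the USA?", stub_model)

for message in history:
    print(f"{message['author']}: {message['content']}")
```

On the second turn the stub is shown three messages, not one, which is the behavior that lets a real model refer back to earlier parts of the conversation.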

Word Embeddings

Word embeddings play a vital role in natural language processing (NLP) tasks by representing words as numerical vectors in a high-dimensional space. These vectors capture the semantic relationships and contextual meanings of words based on their usage patterns.

These embeddings are typically derived from large corpora of text using models like Word2Vec.

For instance, consider the following sentences from our corpus:

"The cat is sitting on the mat."

"The dog is running in the park."

Using Word2Vec or a similar approach, we can represent each word in vector form. Let's assume we have chosen 2-dimensional vectors for simplicity:

cat = [0.2, 0.7]

dog = [0.8, 0.4]

sitting = [0.6, 0.3]

running = [0.9, 0.1]

mat = [0.1, 0.9]

park = [0.7, 0.6]

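In this example, each word is represented by a vector of two numbers giving its position in 2D space. With these made-up values, "cat" lies closest to "mat" (a Euclidean distance of about 0.22) and "dog" is about 0.22 from both "park" and "sitting", while "cat" and "dog" are about 0.67 apart, so the toy numbers do not yet show the grouping a trained model would produce. In embeddings trained on a large corpus, words used in similar contexts, such as "cat" and "dog" or "sitting" and "running", typically end up near each other, and these relative distances reflect the semantic relationships between the words.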
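Computing the distances directly shows which pairs are actually nearest under these toy values. This is a small sketch using Euclidean distance on the vectors listed above; the `distance` helper is a hypothetical name.

```python
import math

# The illustrative 2-D vectors from the list above.
vectors = {
    "cat": [0.2, 0.7], "dog": [0.8, 0.4], "sitting": [0.6, 0.3],
    "running": [0.9, 0.1], "mat": [0.1, 0.9], "park": [0.7, 0.6],
}


def distance(a, b):
    """Euclidean distance between the vectors of words `a` and `b`."""
    return math.dist(vectors[a], vectors[b])


pairs = [("cat", "dog"), ("cat", "mat"), ("dog", "park"), ("sitting", "running")]
for a, b in pairs:
    print(f"{a}-{b}: {distance(a, b):.2f}")
# prints:
# cat-dog: 0.67
# cat-mat: 0.22
# dog-park: 0.22
# sitting-running: 0.36
```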

Word embeddings empower us to perform various operations, such as vector arithmetic. For example, we can compute "king" - "man" + "woman" to obtain a resulting vector that closely aligns with "queen." This demonstrates the remarkable capability of word embeddings to capture semantic relationships and analogies between words.
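The analogy can be reproduced with hand-made vectors. The values below are hypothetical and chosen so that the arithmetic works out exactly; real embeddings only approximate this. The two axes loosely stand for royalty and maleness, and the `analogy` helper is an illustrative name.

```python
import numpy as np

# Hypothetical 2-D vectors; the axes loosely stand for (royalty, maleness).
toy = {
    "king": np.array([0.9, 0.9]),
    "man": np.array([0.1, 0.9]),
    "woman": np.array([0.1, 0.1]),
    "queen": np.array([0.9, 0.1]),
    "apple": np.array([0.5, 0.5]),
}


def analogy(a, b, c, vocab):
    """Return the word nearest to vocab[a] - vocab[b] + vocab[c],
    excluding the three input words themselves."""
    target = vocab[a] - vocab[b] + vocab[c]
    candidates = [w for w in vocab if w not in (a, b, c)]
    return min(candidates, key=lambda w: np.linalg.norm(vocab[w] - target))


print(analogy("king", "man", "woman", toy))  # prints "queen"
```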

The PaLM API applies the same idea to whole texts: its embedding model maps a string of text, whether a single word, a sentence, or a paragraph, to one vector. Developers can use these embeddings to build applications on their own data or on external sources, and feed them into downstream applications built with frameworks like TensorFlow, Keras, JAX, and other open-source libraries.

Let's take a practical example:

x = 'What do squirrels eat?'

close_to_x = 'nuts'

different_from_x = 'This morning I woke up in San Francisco and took a walk to the Bay Bridge. It was a good, sunny morning with no fog.'

model = "models/embedding-gecko-001"

# Generate embeddings

embedding_x = palm.generate_embeddings(model=model, text=x)

embedding_close_to_x = palm.generate_embeddings(model=model, text=close_to_x)

embedding_different_from_x = palm.generate_embeddings(model=model, text=different_from_x)

print(embedding_x)

In the above code snippet, we call the generate_embeddings function to create embedding vectors for the texts x, close_to_x, and different_from_x. We specify the model as "models/embedding-gecko-001" because it is the listed model that supports the embedText method.

The resulting embedding for x may look like this:

Output: {'embedding': [-0.025894871, -0.021033963, 0.0035749928, 0.008222881, ...]}

Having generated the embeddings, we can use the dot product to measure how closely close_to_x and different_from_x relate to x. For vectors of unit length, the dot product equals the cosine similarity and lies between -1 and 1; for vectors of other lengths it is unbounded, so normalize them first if you need that range. Values closer to 1 indicate greater similarity, while values near 0 indicate little similarity.

similar_measure = np.dot(embedding_x['embedding'], embedding_close_to_x['embedding'])

print(similar_measure)

The output may be as follows:

Output: 0.6929858887336946

A value this high suggests that x and close_to_x are closely related. For comparison, let's compute the same measure between x and different_from_x:

different_measure = np.dot(embedding_x['embedding'], embedding_different_from_x['embedding'])

print(different_measure)

The output may be:

Output: 0.4356069925292835

Here, the lower dot product value between the embeddings of x and different_from_x demonstrates a lesser relatedness compared to the embeddings of x and close_to_x.
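If you are unsure whether the vectors you are comparing have unit length, cosine similarity gives a score that is always between -1 and 1. Here is a minimal sketch with numpy; the helper name and the stand-in vectors are hypothetical, and with API access you would pass embedding_x['embedding'] and the other embeddings instead.

```python
import numpy as np


def cosine_similarity(a, b):
    """Dot product of `a` and `b` after scaling both to unit length."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


# Stand-in vectors chosen to show the two extremes.
query = [1.0, 2.0, 0.0]
same_direction = [2.0, 4.0, 0.0]  # same direction, different length
orthogonal = [0.0, 0.0, 3.0]      # shares no component with the query

print(cosine_similarity(query, same_direction))  # approximately 1
print(cosine_similarity(query, orthogonal))      # 0, no alignment
```

Because the score ignores vector length, it stays comparable across texts of very different sizes.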

Conclusion

In conclusion, the PaLM API serves as a user-friendly gateway to powerful PaLM2 models, empowering developers to perform remarkable tasks within their applications. If you're interested in more articles on machine learning, make sure to follow for future updates.